DEFINITIONS

Screening has been defined as the presumptive identification of unrecognized disease or defect by the application of tests, examinations, or other procedures that can be applied rapidly. Screening tests sort out apparently well persons who probably have a disease from those who probably do not.2 By this definition, screening is conducted on asymptomatic, presumably healthy individuals who are tested to determine whether they have high or low probabilities of disease. The results of screening, therefore, are tentative, and neither positive nor negative findings can be considered conclusive. Positive screening results do not necessarily imply disease; they only indicate the need for additional evaluation, typically by more extensive and more expensive methods. Similarly, negative screening results cannot be interpreted as conclusive evidence of the absence of disease.

Screening activities can be classified as “mass” or “selective.”3 Mass screening, or population-based screening, is provided on a large scale to unselected populations, such as visitors to health fairs, shopping centers, or other ad hoc settings. Selective screening, or high-risk screening, is limited to persons who have already been identified as being at increased risk for a particular disease. Tools suitable for mass screening are typically less invasive and less expensive than those used in selective screening.4

Screening may be performed on volunteers in different community settings or on patients seeking health care for unrelated symptoms. Identifying previously unrecognized disease by screening patients who consult for an unrelated condition (e.g., glaucoma screening of a patient consulting for myopia) is sometimes called case finding. When a screening program is implemented, follow-up evaluation should be provided for persons with positive screening tests. Screening is more likely to identify cases with a long preclinical phase of the disease than cases with a short preclinical phase.5

CRITERIA

Screening raises ethical, clinical, and scientific issues, and the decision to screen for a particular disease must be evaluated carefully. Several general principles have been proposed to assist in this evaluation.3,6–9 To be suitable for population-based screening, a disease should meet the following criteria:

- The disease should have an important effect on morbidity or mortality.

- The disease should have a sufficiently high prevalence within the target population to justify screening.

- The disease should have a natural history that is adequately understood.

- Treatment of the disease should be acceptable, effective, and available.

- The outcome of disease would be better if treatment were initiated before the usual time of diagnosis.

- Screening tests should be acceptable, reliable, and valid (i.e., high sensitivity and specificity) and have a reasonable cost.

- The cost of screening and subsequent follow-up evaluation and care should be less than the cost of providing treatment and other services at the usual time of diagnosis.

The rationale for each criterion is discussed below.

Morbidity and Mortality

A condition merits screening only if it has an important public health impact and is serious enough to affect the quality or quantity of life.

Prevalence

Ideally, screening should be aimed at detecting relatively common conditions within the population targeted. As the prevalence of a condition increases in the target population, screening yields more cases and the cost per case detected decreases.9
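A minimal Python sketch of why yield and cost per case move with prevalence. It assumes, hypothetically, a fixed cost per screening test and counts only the cost of the screening tests themselves (not follow-up or treatment); it uses a sensitivity of 70%, the value of Test A in Table 1. The function name and the cost figure are illustrative only.

```python
def screening_yield(n_screened: int, prevalence: float, sensitivity: float,
                    cost_per_test: float) -> tuple[float, float]:
    """Return (expected cases detected, screening-test cost per case detected).

    Hypothetical helper: only the cost of the screening tests is counted;
    follow-up and treatment costs are ignored.
    """
    cases_detected = n_screened * prevalence * sensitivity
    total_test_cost = n_screened * cost_per_test
    return cases_detected, total_test_cost / cases_detected


# Hypothetical program: 1,000 people screened at an assumed 10 cost units per test.
for prevalence in (0.01, 0.05, 0.10):
    detected, cost_per_case = screening_yield(1000, prevalence, 0.70, 10.0)
    print(f"prevalence {prevalence:.0%}: {detected:.0f} cases detected, "
          f"{cost_per_case:.0f} per case detected")
```

In this simplified model the cost per case detected is inversely proportional to prevalence, so a tenfold increase in prevalence cuts it tenfold.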

Natural History

Knowledge of the course of the disease process is essential for screening.10 In some conditions, there is a marked overlap in measurements between diseased and nondiseased persons, and the diagnosis is not clear cut. To be suitable for screening, a disease must have a clearly recognized biologic onset and a diagnosis that can be confirmed by accepted criteria. The disease should also have a latent, asymptomatic stage before it becomes clinically apparent (see Fig. 1). The presence of this asymptomatic stage will permit detection and intervention before the usual time of diagnosis. The time lapse between early detection by screening and the usual time of diagnosis is known as the lead time.8,11


Effectiveness and Availability of Treatment

Screening is of value when the disease can be effectively treated or controlled. With incurable hereditary conditions, control of disease may be possible with counseling.12

In addition to being effective, the treatment must be accessible. Screening is justified only when facilities for diagnosis and treatment are available to persons with positive screening results. A major issue to emphasize, because it is often overlooked, is that resources for effective follow-up must be an integral part of a screening program.

Better Outcome with Early Treatment

The rationale for screening is that early detection and treatment will improve disease outcome. This goal can be attained only if intervention in the asymptomatic stage (Fig. 1) produces better results than when treatment is begun after the usual time of diagnosis.

Screening Tests

An important prerequisite for a screening test is its acceptability to the persons being screened and to those performing and interpreting the test. Other requirements are simplicity, so that the test can be easily administered, and reliability or reproducibility, so that consistent results may be expected on repeated measurements by the same or a different observer. An important requirement is test validity (i.e., the ability to correctly identify diseased and nondiseased persons).1,4 Validity is measured by sensitivity and specificity (see Table 1). Sensitivity is the ability of a test to correctly identify cases (i.e., diseased persons). Thus, a screening test with 90% sensitivity will be positive in 90 of every 100 cases screened. Specificity is the ability of a test to correctly identify noncases (i.e., nondiseased persons). Thus, a test with 95% specificity will be negative in 95 of every 100 noncases tested. In the example given in Table 1, Test A has 70% sensitivity and 80% specificity.

TABLE 1. Example of Sensitivity, Specificity, and Predictive Values of a Screening Test, Assuming an Eye Disease with a Prevalence of 10% in the Target Population

| Results of Screening Test A | Eye Disease X Present | Eye Disease X Absent | Total |
|---|---|---|---|
| Positive test | 70 (a) True positives | 180 (b) False positives | 250 (a + b) All positive tests |
| Negative test | 30 (c) False negatives | 720 (d) True negatives | 750 (c + d) All negative tests |
| Total | 100 (a + c) Cases | 900 (b + d) Noncases | 1000 (a + b + c + d) Total |

Sensitivity and Specificity

The denominator for these calculations is the number of cases (a + c) or noncases (b + d) of Eye Disease X.

- Sensitivity is the proportion of true positives (cases with positive screening tests) among all cases = $a/(a + c)$ (70/100 = 0.70, or 70% sensitivity).

- Specificity is the proportion of true negatives (noncases with negative screening tests) among all noncases = $d/(b + d)$ (720/900 = 0.80, or 80% specificity).

Predictive Values

The denominator for these calculations is the number of persons with positive tests (a + b) or negative tests (c + d).

- Positive predictive value is the proportion of true positives (cases with positive screening tests) among all those with positive tests = $a/(a + b)$ (70/250 = 0.28, or 28%).

- Negative predictive value is the proportion of true negatives (noncases with negative screening tests) among all those with negative tests = $d/(c + d)$ (720/750 = 0.96, or 96%).
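The four measures can be computed directly from the cells of the 2 × 2 table. A minimal Python sketch, applied to the counts for Test A in Table 1; the class and method names are illustrative, not from any particular library.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Counts from a screening 2 x 2 table, labeled as in Table 1."""
    a: int  # true positives: cases with positive tests
    b: int  # false positives: noncases with positive tests
    c: int  # false negatives: cases with negative tests
    d: int  # true negatives: noncases with negative tests

    def sensitivity(self) -> float:
        return self.a / (self.a + self.c)  # denominator: all cases

    def specificity(self) -> float:
        return self.d / (self.b + self.d)  # denominator: all noncases

    def ppv(self) -> float:
        return self.a / (self.a + self.b)  # denominator: all positive tests

    def npv(self) -> float:
        return self.d / (self.c + self.d)  # denominator: all negative tests


test_a = TwoByTwo(a=70, b=180, c=30, d=720)
print(f"sensitivity {test_a.sensitivity():.0%}, specificity {test_a.specificity():.0%}")
# prints: sensitivity 70%, specificity 80%
print(f"PPV {test_a.ppv():.0%}, NPV {test_a.npv():.0%}")
# prints: PPV 28%, NPV 96%
```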

Sensitivity and specificity should always be evaluated together. A test with high sensitivity will detect most of the cases and will thus have few false-negative results. However, if the same test has low specificity, many false-positive results will occur and lead to overreferrals. In contrast, a test with low sensitivity and high specificity will have many false-negative results but few false-positive results.

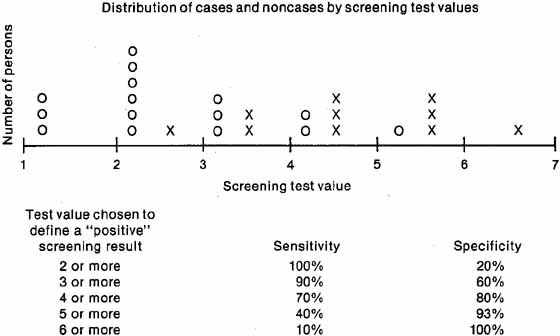

Ideally, a test should have both high sensitivity and high specificity, but this goal is difficult to achieve in practice. Because the test values of diseased and nondiseased persons usually overlap, some cases have low (negative) test values and some noncases have high (positive) values (see Fig. 2). The test value chosen as the cutoff to define a “positive” screening result therefore affects both sensitivity and specificity. If a low cutoff is chosen, sensitivity will be high, because most cases will have “positive” screening results and will be referred for further diagnostic examination; specificity, however, will be low, because many noncases will also be referred. Raising the cutoff that defines a “positive” result increases specificity at the expense of sensitivity.

The choice of cutoff for referral depends on the disease being detected. If failure to detect cases has serious consequences (for example, nondetection of malignant disease), a low cutoff should be chosen for referral, increasing sensitivity even though specificity will decrease. If an excessive number of overreferrals is unacceptable (for example, when follow-up of screening requires invasive procedures), a high cutoff should be chosen to increase specificity, although sensitivity will decrease.
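A minimal Python sketch of the cutoff trade-off, using made-up, overlapping test values for cases and noncases. The values, their units, and the function name are hypothetical and are not data from this chapter; a value at or above the cutoff is treated as a “positive” screening result.

```python
def sens_spec_at_cutoff(case_values, noncase_values, cutoff):
    """Sensitivity and specificity when a value >= cutoff counts as 'positive'."""
    true_pos = sum(1 for v in case_values if v >= cutoff)
    true_neg = sum(1 for v in noncase_values if v < cutoff)
    return true_pos / len(case_values), true_neg / len(noncase_values)


# Hypothetical, overlapping test values (arbitrary units) for illustration only.
cases = [18, 21, 23, 25, 27, 30]
noncases = [12, 14, 15, 17, 19, 22]

for cutoff in (16, 20, 24):
    sens, spec = sens_spec_at_cutoff(cases, noncases, cutoff)
    print(f"cutoff {cutoff}: sensitivity {sens:.0%}, specificity {spec:.0%}")
```

With these illustrative values, raising the cutoff from 16 to 24 moves sensitivity from 100% to 50% and specificity from 50% to 100%, the same direction of change described above.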


The evaluation of tests also includes “predictive values.” Sensitivity and specificity evaluate the ability of a test to separate cases correctly from noncases and are measures of validity; predictive values are not. The predictive value of a positive test is the percentage of cases among all those with positive tests (Table 1). In the example in Table 1, where Disease X had a prevalence of 10%, 250 persons had positive tests and 70 truly had the disease, giving a positive predictive value of 28% for Test A in this population. The predictive value of a negative test is the percentage of noncases among persons with negative tests (Table 1); because 720 of the 750 persons with negative tests were noncases, the negative predictive value was 96%. Predictive values depend not only on the sensitivity and specificity of the test but also on the prevalence of disease in the population screened. As the prevalence of the disease increases, the positive predictive value increases (see Table 2). Therefore, a smaller proportion of positive results are false positives when screening is performed in populations where the disease is common; conversely, screening leads to a large number of overreferrals when carried out in populations where the disease is rare. For this reason, the cost per case detected increases when the population screened has a low prevalence of disease.

TABLE 2. Positive Predictive Values by Disease Prevalence at Selected Levels of Sensitivity and Specificity

| Sensitivity | Specificity | Prevalence 0.5% | Prevalence 1% | Prevalence 2% | Prevalence 5% | Prevalence 10% |
|---|---|---|---|---|---|---|
| 50% | 50% | 0.5% | 1% | 2% | 5% | 10% |
| 50% | 90% | 2% | 5% | 9% | 21% | 36% |
| 75% | 50% | 0.7% | 1% | 3% | 7% | 14% |
| 90% | 95% | 8% | 15% | 27% | 49% | 67% |
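The entries in Table 2 follow from Bayes' theorem: PPV = (sensitivity × prevalence) / [sensitivity × prevalence + (1 − specificity) × (1 − prevalence)]. A minimal Python sketch that recomputes the table; the function name is illustrative, and the printed values carry one decimal place, whereas the table rounds most entries to whole percentages.

```python
def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """PPV from Bayes' theorem: true positives / all positives, per person screened."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


prevalences = (0.005, 0.01, 0.02, 0.05, 0.10)
for sens, spec in ((0.50, 0.50), (0.50, 0.90), (0.75, 0.50), (0.90, 0.95)):
    row = [positive_predictive_value(sens, spec, p) for p in prevalences]
    print(f"{sens:.0%}/{spec:.0%}:", " ".join(f"{v:.1%}" for v in row))
```

The same function reproduces the 28% positive predictive value of Test A in Table 1 when called with sensitivity 0.70, specificity 0.80, and prevalence 0.10.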

TWO-STAGE SCREENING

Sometimes a screening program is multiphasic, that is, it involves a series of sequential tests.4 Usually, an inexpensive and noninvasive screening test is performed first; those with positive results are then retested using a more accurate test, which is typically more expensive and/or more invasive.4 In this situation, the sensitivity and specificity of the two tests applied in sequence can be combined into a net sensitivity and a net specificity.4 These concepts are illustrated by an example that assumes multiphasic screening for Disease X, first using Test A (see Table 1) and then applying Test B (see Table 3) to the subset that screened positive with Test A.

TABLE 3. Example of Two-Stage Screening, Net Sensitivity and Net Specificity for a Sample Eye Disease X, Based on Screening Test A Followed by Screening Test B

| Result of Screening Test B | Disease Present | Disease Absent | Total |
|---|---|---|---|
| Positive | 63 True positives | 18 False positives | 81 |
| Negative | 7 False negatives | 162 True negatives | 169 |
| Total | 70 Cases† | 180 Noncases‡ | 250 |

† This number is obtained from Table 1 and represents the true positives (tested positive and had the disease) from Test A.

‡ This number is obtained from Table 1 and represents the false positives (tested positive and did not have the disease) from Test A.

(Test B: Sensitivity = 90%; specificity = 90%)

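Net Sensitivity

$$\text{Net sensitivity} = \frac{\text{True positives from Test B}}{\text{All cases in population for Test A}} = \frac{63}{100} = 63\%$$

Net Specificity

$$\text{Net specificity} = \frac{\text{True negatives from Test A} + \text{True negatives from Test B}}{\text{All noncases in population for Test A}} = \frac{720 + 162}{900} = \frac{882}{900} = 98\%$$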

Net sensitivity and net specificity are derived in two stages. Stage 1 evaluates the initial screening test in the total population, as presented for Test A in Table 1. In Stage 2, individuals who tested positive with Test A are rescreened using Test B. In the example, the 250 individuals who tested positive in Table 1 are retested with Test B, which has 90% sensitivity and 90% specificity, as indicated in Table 3.

To calculate net sensitivity, the numerator is the number of individuals identified as true positives (i.e., tested positive and have the disease) by Test B, which is 63 in the example, and the denominator is the total number of cases (i.e., the sum of true positives and false negatives) in the target population initially screened with Test A, which is 100 in the example. Net sensitivity is therefore 63%, lower than the sensitivity of either test; it equals the sensitivity of Test A times the sensitivity of Test B (0.70 × 0.90 = 0.63). Net specificity is calculated with the sum of the true negatives (i.e., tested negative and do not have the disease) identified by Test A (n = 720) and Test B (n = 162) as the numerator and the total number of noncases (i.e., the sum of true negatives and false positives) in the population evaluated by Test A (n = 900) as the denominator. The net specificity is therefore 98%, higher than the specificity of either Test A or Test B, so using the two screening tests yields an overall gain in specificity, at the cost of a lower net sensitivity.

As this example demonstrates, retesting individuals who initially test positive increases specificity, thus decreasing the likelihood of overreferrals due to false-positive tests. The positive predictive value also increases when persons in this group are retested, because they have a higher prevalence of the disease (70 of 250, or 28%, compared with 10% in the population initially screened).
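The two-stage calculation can be reproduced with a short Python sketch. It assumes, as the example in Table 3 implicitly does, that Test B has the same sensitivity and specificity among Test A positives as it would in general; under that assumption, net sensitivity equals sens_A × sens_B and net specificity equals 1 − (1 − spec_A)(1 − spec_B). The function and parameter names are illustrative.

```python
def serial_screening(cases: float, noncases: float,
                     sens_a: float, spec_a: float,
                     sens_b: float, spec_b: float) -> dict[str, float]:
    """Two-stage (serial) screening: Test B is applied only to Test A positives.

    Assumes Test B keeps the same sensitivity and specificity among
    Test A positives (the assumption behind the counts in Table 3).
    """
    # Stage 1: Test A on the whole target population.
    tp_a = cases * sens_a
    fp_a = noncases * (1 - spec_a)
    tn_a = noncases * spec_a
    # Stage 2: Test B on Test A positives only.
    tp_b = tp_a * sens_b
    fp_b = fp_a * (1 - spec_b)
    tn_b = fp_a * spec_b
    return {
        "net_sensitivity": tp_b / cases,              # e.g. 63 / 100
        "net_specificity": (tn_a + tn_b) / noncases,  # e.g. (720 + 162) / 900
        "ppv_after_stage_2": tp_b / (tp_b + fp_b),    # e.g. 63 / 81
    }


result = serial_screening(cases=100, noncases=900,
                          sens_a=0.70, spec_a=0.80, sens_b=0.90, spec_b=0.90)
for name, value in result.items():
    print(f"{name}: {value:.1%}")
```

With the inputs from Tables 1 and 3, this gives a net sensitivity of 63%, a net specificity of 98%, and a positive predictive value after the second stage of 63/81 (about 78%), compared with 28% for Test A alone.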

Cost-Effectiveness

Aside from the purely humanitarian and social benefits of preventing morbidity, the cost-benefit of screening must be evaluated. Although screening may ultimately reduce the public health impact of a disease and bring about economic savings, the screening process itself generates costs. A common problem is the lack of inexpensive tests that can effectively separate cases from noncases. In addition to the resources expended on the testing process itself, these costs include the follow-up and diagnosis of persons with positive tests, as well as the cost of treating all the newly detected cases. Errors in classifying persons as “positives” and “negatives” also have a cost. Besides being subjected to unnecessary diagnostic tests for a condition they do not have, persons with false-positive tests may suffer other undesirable consequences, such as anxiety and worry at being considered a disease suspect. Costs are also incurred by persons with false-negative screening tests, who may derive false reassurance from screening. Screening for eye conditions may therefore be justified if the cost of a screening program and its associated services is less than the costs incurred when the disease is detected at the usual time of diagnosis, for example, the costs of providing long-term services for the visually disabled. It is also justified if earlier diagnosis will diminish the psychologic and/or other negative consequences associated with the ophthalmologic condition.